In case you’re wondering, my Minion pic has nothing to do with anyone here at SFN. The fact that a 15 min drive home after lunch took almost an hour should explain it. Oh and I forgot to mention…there was less than ½ inch of snow on the ground at that time. So for everyone stuck in the snow, keep safe and keep warm. And remember, the idiots have us out-numbered.

THE BUBBLE IS WEAK THIS YEAR

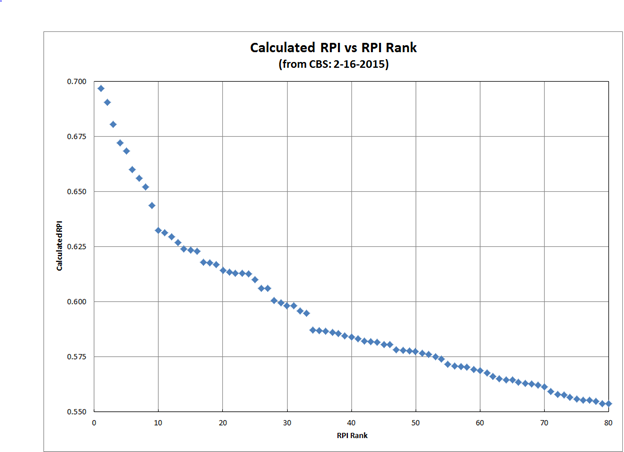

In any event, this week’s update is going to focus heavily on the NCAAT bubble. In last week’s entry, I called the Bubble “weak” without giving any explanation or reasoning, so let’s start with that. Remember that what we usually call “RPI” is actually just a ranking. The actual Rating Percentage Index (RPI) is calculated based on your team’s adjusted winning percentage (home losses and road wins count more), your opponents’ winning percentage (with the games against you removed from their record), and your opponents’ opponents’ winning percentage. So here is a graph that correlates Monday morning’s RPI calculations from CBS with the resulting ranking.
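For anyone who wants to see the arithmetic, here is a minimal sketch of the three-part calculation described above. The 25/50/25 component weights and the 1.4/0.6 home/road factors are the commonly cited NCAA values, not numbers taken from this entry or from CBS, and the function names are just for illustration.

```python
# Sketch of the RPI calculation. All weights below are assumptions based on
# the commonly cited NCAA men's formula, not values quoted in this entry.
ROAD_WIN = HOME_LOSS = 1.4   # results that count more
HOME_WIN = ROAD_LOSS = 0.6   # results that count less
NEUTRAL = 1.0


def adjusted_wp(home_w, road_w, neutral_w, home_l, road_l, neutral_l):
    """Adjusted winning percentage: road wins and home losses weigh more."""
    wins = HOME_WIN * home_w + ROAD_WIN * road_w + NEUTRAL * neutral_w
    losses = HOME_LOSS * home_l + ROAD_LOSS * road_l + NEUTRAL * neutral_l
    total = wins + losses
    return wins / total if total else 0.0


def rpi(wp, owp, oowp):
    """Combine the team's adjusted WP, opponents' WP (games against the team
    already removed), and opponents' opponents' WP into one rating."""
    return 0.25 * wp + 0.50 * owp + 0.25 * oowp


if __name__ == "__main__":
    # Made-up record: 10-2 at home, 5-4 on the road, 1-0 on a neutral floor.
    wp = adjusted_wp(10, 5, 1, 2, 4, 0)
    print(round(rpi(wp, 0.55, 0.50), 4))
```

Note that half of the rating comes from what your opponents do, which is why a team can move in the rankings without playing.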

There are a number of conclusions that can be drawn from this graph, but let’s focus on the bubble end of the graph. Starting with #34, there is very little difference in RPI from one team to the next. Thus when you lose, you will drop quickly, and when you win, you’ll rise just as quickly. This is what I meant by weak.

It also means that there won’t be much difference between the last four IN the NCAAT and the first four OUT…which has been a noticeable trend over the last several years. This fact also ties into an entry I did last year on whether or not parity exists in college basketball. While I argued that parity does not (and will not) exist at the top of the college basketball world, this graph certainly lends credence to parity at the next step down.

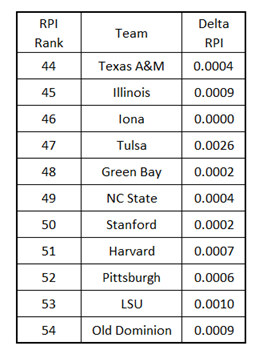

To further illustrate why a win or a loss results in such large moves, let’s look at State’s current portion of the rankings and the delta between one team and the next:

To be clear, the “Delta RPI” shows how close each team is to the one ranked one spot higher. So it should be obvious that the difference between a win and a loss will usually mean multiple positions in the ranking. This small Delta also means that no bubble team is truly IN or OUT even this late into the season.
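The “Delta RPI” column is nothing more than a subtraction down the sorted list. Here is a rough sketch of how it could be computed; the team names and ratings are made-up placeholders, not the actual CBS numbers.

```python
def delta_to_next(ratings):
    """Return (rank, team, rpi, delta) rows, where delta is the gap to the
    team ranked one spot higher (None for the top team)."""
    ranked = sorted(ratings.items(), key=lambda kv: kv[1], reverse=True)
    rows = []
    for i, (team, value) in enumerate(ranked):
        gap = None if i == 0 else round(ranked[i - 1][1] - value, 4)
        rows.append((i + 1, team, value, gap))
    return rows


if __name__ == "__main__":
    # Hypothetical ratings for illustration only.
    sample = {"Team A": 0.5800, "Team B": 0.5790, "Team C": 0.5750}
    for row in delta_to_next(sample):
        print(row)
```

When the deltas are this small, a single result that moves a rating by a few thousandths is enough to jump over several teams at once.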

Hopefully this discussion will also clear up a question that I get nearly every year….How much will State’s “RPI” change if such and such happens? Even if you assume an outcome for a number of State’s games, you cannot calculate how much the ranking would change unless you also assume the results of a dozen or more other teams. Just remember, winning is always good and losing is always bad…and let’s leave the math to someone that is getting paid to do it.
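To see why the ranking can’t be projected from State’s results alone, here is a small sketch with made-up ratings. State’s rating is identical in both “after” scenarios; only the neighbors’ results differ.

```python
def rank_of(team, ratings):
    """1-based rank: one plus the number of teams with a higher rating."""
    mine = ratings[team]
    return 1 + sum(1 for t, v in ratings.items() if t != team and v > mine)


# Hypothetical ratings for a five-team slice of the bubble.
before = {"State": 0.5700, "A": 0.5720, "B": 0.5715, "C": 0.5705, "D": 0.5690}

# State wins and nobody else's rating moves.
others_idle = dict(before, State=0.5730)

# State wins, but A and B also win.
others_win = dict(before, State=0.5730, A=0.5745, B=0.5735)

if __name__ == "__main__":
    print(rank_of("State", before))
    print(rank_of("State", others_idle))
    print(rank_of("State", others_win))
```

Same State rating, different ranking, which is exactly why the question has no answer without assuming everyone else’s results too.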

As we move onto our weekly summaries, I’ll highlight a few more examples of a weak bubble.

ACC UPDATE

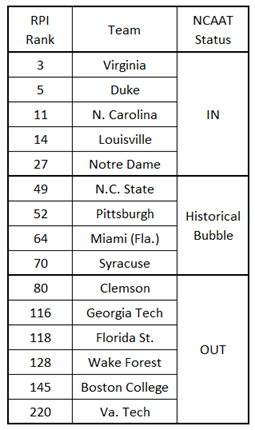

Here are the ACC teams sorted on RPI Rankings (from CBS after games played on Sunday):

Miami lost mid-week and their game at BC got postponed yesterday, while State and Pitt both won over the weekend. So both winning teams have moved above Miami (at least for the time being).

Syracuse continues their downward spiral (as predicted).

Clemson’s RPI is lower than any team ever to receive an at-large bid, but let’s look at their position on the Dance Card:

So we see that Clemson’s resume (even with a horrid RPI) is good enough to sneak several spots above the calculated burst point. I think that this also shows how weak the Bubble is this year.

For State’s OOC wins, BSU is still hanging around and Tenn looks to be fading. Go Broncos!!!

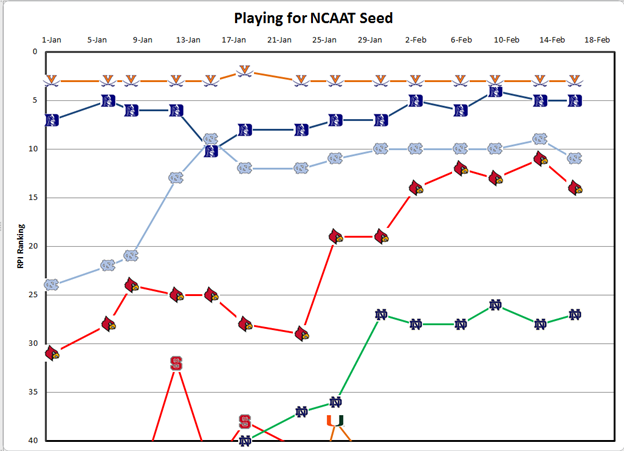

Time for the RPI trend graphs:

More examples of a Weak Bubble:

Mid-week, Syracuse jumped 8 spots with a road win against BC. (BC !!!!)

Pitt moved up three spots with a LOSS at Louisville.

Miami moved up two spots without even playing.

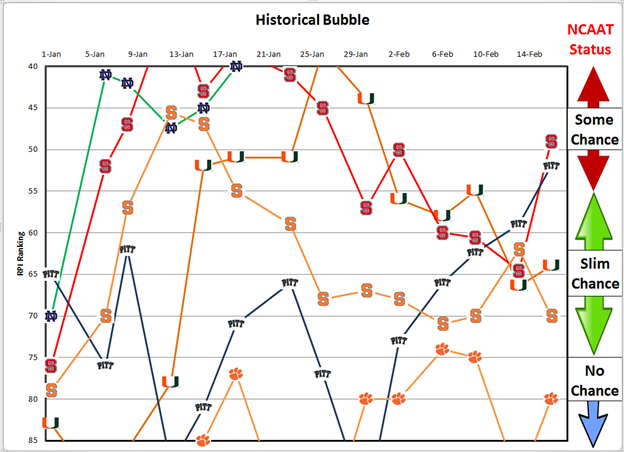

I think that I’ve presented enough data that I can quit beating the “Weak Bubble” drum for now. The bottom line is: win and you are virtually guaranteed to move up. So let’s take a little closer look at the ACC Bubble Teams:

PITT

Through a fortunate bit of scheduling, Pitt had four of the last five games at home. By playing well, they won all four home games, including key victories against UNC and ND. This good streak of basketball gives this Pitt team more Top-50 wins than last year’s team got all year. However, the Dance Card still shows them one spot below the burst line. So they need to keep winning and here are their last regular season games:

Feb 16 @No.2 Virginia

Feb 21 @Syracuse

Feb 24 Boston College

Mar 1 @Wake Forest

Mar 4 Miami (Fla.)

Mar 7 @Florida St.

Even with 4 of the last 6 on the road, Pitt should have an NCAAT bid wrapped up before the ACCT starts. Anything less would have to be termed a huge disappointment.

MIAMI

Including Monday afternoon’s win over BC, Miami has a 2-4 record over the last six games and a significantly weakened position versus the Bubble. While they are still above the calculated burst line, losses to GT, FSU, and WF should have Canes fans concerned. They do have the big road win at Duke along with two victories over fellow Bubble teams (NCSU and Illinois), so they are not in bad shape….but need to pick up the pace.

I have my ACC Strength of Schedule spreadsheet up and running and it appears that Miami will end up with one of the easiest conference schedules this year. So any team that wins in Durham and has an easy conference schedule, yet fails to make the NCAAT, would have to be considered “under-performing”.

Here’s Miami’s remaining regular season schedule:

Feb 18 Va. Tech

Feb 21 @No.12 Louisville

Feb 25 Florida St.

Feb 28 No.15 N. Carolina

Mar 4 @Pittsburgh

Mar 7 @Va. Tech

This stretch of games looks tougher than the last six, so Miami is going to have to pick up the pace if they are going to lock down a bid before the ACCT.

Note that Miami’s win on Monday afternoon is not included in the RPI rankings/graph above, but is included in the ACC standings at the bottom of the entry.

CLEMSON

Clemson dug themselves a huge hole early by playing a weak OOC schedule (currently ranked #192) and playing it horribly, with losses to South Carolina (#104), Rutgers (#138), Gardner-Webb (#166), and Winthrop (#219). They do have a few good points to the season, with a win against #18 Arkansas and wins against bubble teams NC State, Pitt, and LSU.

I wouldn’t want to head into Selection Sunday with an RPI ranking below what has EVER been selected before. So the Tiggers need to pick up the pace and here is their remaining schedule:

Feb 16 @Georgia Tech

Feb 21 @No.4 Duke

Feb 28 Georgia Tech

Mar 3 N.C. State

Mar 7 @No.10 Notre Dame

NC STATE

State’s road win over Louisville gives them enough “big” wins to get an at-large bid. Now they just need to get enough total victories to secure an at-large bid. So how many wins will that take?

Saying that a 4-1 record will secure a bid is as insightful as saying that water is wet. However, projecting a sure bid with results worse than that runs the risk of being overly optimistic. The bottom line is that the minimum acceptable record will depend on what everyone around State on the bubble does.

Gott hit it right when he said that State could beat anyone remaining on the schedule and could just as easily lose to any of them. The remainder of the season should prove interesting….and I mean that in the context of the old Chinese curse.

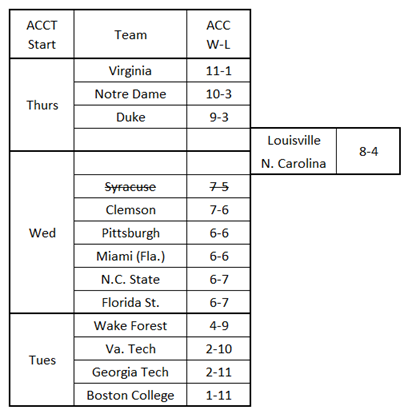

ACCT BUBBLE

I didn’t even know that Miami and BC were playing this afternoon until I pulled up their schedule at CBS Sports. So I’ve updated the standings for the result of that game, but I’m going to publish this entry before the Monday night games are played. So here’s what we have for now:

Thanks to Syracuse pulling out of the ACCT, the “States” are hanging onto a Wed Start with a two-game lead over WF. But it’s also interesting to note that Clemson only has a one-game lead over both of them.

Last year, everyone that started on the second day of the ACCT had a 0.500 or better conference record. FSU might hold onto their Wed Start, but I wouldn’t bet on them reaching 0.500 this year.